United States

2024 Hickory Road suite 208, Homewood, IL 60430, USA

United Kingdom

10 John Street, London WC1N 2EB, UK

Romania

Strada Republicii 87, Cluj-Napoca, Romania

Machine intelligence actors are entering the clinical IT space as consumers of medical images and creators of diagnostic findings. These AI results are another component of the data exchanged between PACS/VNA and connected systems. While the immediate goal is to improve the accuracy and efficiency of image interpretation to inform the clinical care team, added benefits become possible when AI results are coded and structured in an industry standard for machine consumption. For example, reporting software can automatically incorporate AI findings into the physician report, so that findings are expressed more consistently and in line with best practice.

Machine Learning (ML) is data hungry. Curating and labeling images is costly and labor-intensive, and it can limit the development of AI. And the cost is not merely that of initial training. AI models must be retrained periodically to keep pace with changes in image acquisition, protocols, and practice. Regulatory compliance requires performance monitoring, and validation whenever the AI model, its intended use, or its inputs change (such as extending the range of images to include another modality manufacturer). Standardized coding of AI results can lower these costs. Indeed, the frequency of image reads by machine intelligence actors may eventually approach, or exceed, that of patient care use. The stored images, when correlated with coded diagnostic findings, are of enormous value for developing AI models.
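As a rough illustration of why coded results lower monitoring costs: once the AI finding and the confirmed reference finding are both available as coded values, routine performance metrics reduce to simple counting. The sketch below assumes each study has already been reduced to a pair of booleans (AI positive, reference positive). The function name and the sample data are hypothetical and are not taken from any standard or product.

```python
from typing import Iterable, Optional, Tuple


def sensitivity_specificity(
    pairs: Iterable[Tuple[bool, bool]],
) -> Tuple[Optional[float], Optional[float]]:
    """Each pair is (ai_positive, reference_positive) for one study."""
    tp = fn = tn = fp = 0
    for ai_positive, reference_positive in pairs:
        if reference_positive:
            if ai_positive:
                tp += 1
            else:
                fn += 1
        else:
            if ai_positive:
                fp += 1
            else:
                tn += 1
    # Return None when a metric is undefined (no positives or no negatives).
    sensitivity = tp / (tp + fn) if (tp + fn) else None
    specificity = tn / (tn + fp) if (tn + fp) else None
    return sensitivity, specificity


# Illustrative data only, not real results.
sample = [(True, True), (False, True), (True, True),
          (False, False), (True, False), (False, False)]
sens, spec = sensitivity_specificity(sample)
```

Running the same calculation after a model update, or on images from a newly added modality manufacturer, is one way such monitoring could be automated when results are stored in coded form.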

Coded AI results can extend and feed data registries such as the ACR and ACC national registries for Radiology and Cardiology. Providers use this information for self-assessment of performance and quality improvement programs.

Because of these developments, DICOM and IHE are devoting much attention to developing the standards needed to incorporate AI into medical imaging IT. These changes to the standards will add new product requirements for data storage, retrieval, display, and data migration.

Over the years, the rate of medical image production has increased. Images are larger, higher resolution, volumetric, more sensitive, and multiparametric. The sheer volume of images, pixels, and voxels acquired during a procedure can be huge. Moreover, innovative imaging modalities detect an expanding range of physical, functional, and physiological properties. DICOM continues to add supplements to accommodate advances in medical imaging technology that provide greater detail and diagnostic value. The information deluge threatens to overwhelm the capacity of radiologists and other medical imaging specialists to adequately review the images, with a growing risk of missing significant features.

With recent advances in deep learning and inexpensive graphics processing units, there is now a surge in machine intelligence tools that support visual diagnostic tasks: navigating images, identifying features, and detecting disease. So-called computer-assisted detection (CADe) and diagnosis (CADx) have been around for decades, though adoption has been limited and specialized (mammography, cardiovascular, chest, colon, OB-GYN). Deep learning drastically lowers the barrier to developing such algorithms, and deep learning algorithms have recently been emerging in nearly every modality and specialty. Machine intelligence can alleviate clinical workload and improve efficiency by directing clinician attention to suspicious areas and by segmenting and classifying tissue and degree of disease.

Machine intelligence can reduce large, complex, feature-rich image data to descriptive and quantitative values, without intra-observer variability, and with potential improvements in sensitivity and specificity. AI can analyze image data without the perceptual limitations of human vision, detecting and characterizing features that are difficult for humans to visualize. As such, AI reduces the human workload while improving accuracy, presenting relevant results to the clinician and sparing them time-consuming visual tasks on large image sets.

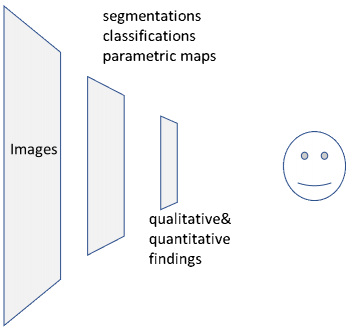

As illustrated in the diagram above, the dominant workflow without AI/CAD has one type of consumer: a radiologist or other physician or specialist, who reviews the images on a workstation and delivers a summary of results as findings, impressions, and recommendations.

With the adoption of artificial intelligence models, machine consumers digest images and deliver results, as shown in the lower half of the diagram. The results output by the AI model may include findings such as the presence or absence of abnormalities, the locations of these findings in the image set, measurements (diameters, volumes, etc.), quantitative tissue properties (e.g. elasticity, perfusion), and tissue classifications such as segmented lesions or tumors.

Currently, most AI models encode their results graphically for human eyes, as synthetic images in DICOM Secondary Capture, annotations in DICOM GSPS, or even in non-DICOM formats. These are acceptable for human consumers but not for machine consumers. With a standardized encoding, workstation software can use AI results to optimize image interpretation tasks. Software tools and other AI models can longitudinally track the progression of lesions and compare current measurements and findings to those of prior encounters. If AI results are not coded in DICOM for machine consumption, the value of the AI is not fully realized.
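The longitudinal comparison described above can be sketched in a few lines once measurements are available as coded values rather than pixels. This is a minimal sketch under stated assumptions: the `Measurement` class, its field names, and the `percent_change` helper are hypothetical, and it assumes each lesion carries an identifier that stays stable across studies.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, Tuple


@dataclass(frozen=True)
class Measurement:
    tracking_id: str  # assumed stable identifier for the same lesion across studies
    concept: str      # what was measured, e.g. a coded concept meaning
    value: float
    unit: str         # unit code, e.g. the UCUM code "mm"


def percent_change(
    prior: Iterable[Measurement], current: Iterable[Measurement]
) -> Dict[Tuple[str, str], float]:
    """Percent change per (lesion, concept) present in both studies."""
    prior_by_key = {(m.tracking_id, m.concept): m for m in prior}
    changes = {}
    for m in current:
        p = prior_by_key.get((m.tracking_id, m.concept))
        # Skip new lesions, mismatched units, and zero baselines.
        if p is None or p.unit != m.unit or p.value == 0:
            continue
        changes[(m.tracking_id, m.concept)] = 100.0 * (m.value - p.value) / p.value
    return changes


# Illustrative values only.
prior_study = [Measurement("lesion-1", "diameter", 10.0, "mm")]
current_study = [
    Measurement("lesion-1", "diameter", 12.0, "mm"),
    Measurement("lesion-2", "diameter", 5.0, "mm"),  # new lesion, no prior
]
growth = percent_change(prior_study, current_study)
```

None of this is possible when the measurement exists only as text burned into a Secondary Capture image, which is the practical argument for a coded encoding.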

The foundation for encoding findings for machine consumption has been available for two decades in DICOM Structured Reporting (SR), though adoption has been concentrated in specialties that use CAD and where measurements are important. DICOM Part 16 specifies the templates and the controlled terminology, which draws on DICOM, SNOMED, UMLS, LOINC, UCUM, and others.
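To make the idea of a coded finding concrete, the sketch below models a numeric SR content item as nested Python dictionaries keyed by DICOM attribute keywords. It is a simplified illustration, not a conformant SR object: a real document follows a Part 16 template and would normally be built with a DICOM toolkit. The `Code` class and `num_item` helper are hypothetical, and the concept code uses a made-up private scheme (`99DEMO`). Only the UCUM unit code `mm` is a real code.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Code:
    value: str    # CodeValue
    scheme: str   # CodingSchemeDesignator
    meaning: str  # CodeMeaning

    def as_item(self) -> dict:
        return {
            "CodeValue": self.value,
            "CodingSchemeDesignator": self.scheme,
            "CodeMeaning": self.meaning,
        }


def num_item(name: Code, value: float, units: Code) -> dict:
    """Build a simplified NUM content item: a coded name, a value, coded units."""
    return {
        "RelationshipType": "CONTAINS",
        "ValueType": "NUM",
        "ConceptNameCodeSequence": [name.as_item()],
        "MeasuredValueSequence": [
            {
                "NumericValue": value,
                "MeasurementUnitsCodeSequence": [units.as_item()],
            }
        ],
    }


# Hypothetical concept code in a private scheme; real reports would use
# the codes required by the applicable DICOM Part 16 template.
LESION_DIAMETER = Code("DEMO-001", "99DEMO", "Lesion diameter")
MILLIMETER = Code("mm", "UCUM", "millimeter")

item = num_item(LESION_DIAMETER, 12.5, MILLIMETER)
```

Because the name and the units are codes rather than free text, a consuming application can match on `(CodeValue, CodingSchemeDesignator)` without parsing display strings.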

As mentioned, most current AI products do not use DICOM to encode results for machine consumption. On July 16, 2020, IHE published the AI Results Integration Profile, Revision 1.1, for Trial Implementation. This profile specifies encoding requirements and DICOM Part 16 Templates to use with the Comprehensive 3D SR SOP Class. The types of results communicated include:

The AI Results profile also includes some other DICOM SOP Classes: Segmentation; Parametric Map, for deep learning saliency maps, tissue properties, or classifications that scale to the underlying image; and Key Object Selection, intended as an aid for manual navigation.

The IHE AI Results profile does not specify code sets for general findings, apart from anatomical site concepts (SNOMED), units of measure (UCUM), and some others. However, there is much to leverage in the efforts of professional societies such as the American College of Radiology (ACR). The Reporting and Data Systems (RADS) vocabulary from the ACR provides standardized terminology and assessment structures to characterize findings, e.g. the well-known Breast Imaging Reporting and Data System (BI-RADS). Other relevant vocabularies include the Common Data Elements for Radiology (CDE), developed by the ACR together with the RSNA, and the RSNA's RadLex.
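A controlled assessment vocabulary is straightforward to enforce in software. The sketch below models the BI-RADS assessment categories 0 to 6 as a Python enumeration and rejects any value outside that set. The class and function names are hypothetical, the member names paraphrase the category descriptions, and the integers are the category numbers themselves, not code values from DICOM or any other terminology.

```python
from enum import IntEnum


class BiRads(IntEnum):
    """BI-RADS assessment categories (names paraphrased)."""
    INCOMPLETE = 0
    NEGATIVE = 1
    BENIGN = 2
    PROBABLY_BENIGN = 3
    SUSPICIOUS = 4
    HIGHLY_SUGGESTIVE_OF_MALIGNANCY = 5
    KNOWN_BIOPSY_PROVEN_MALIGNANCY = 6


def parse_assessment(raw: str) -> BiRads:
    """Convert an AI model's raw output string into a validated category."""
    try:
        return BiRads(int(raw))
    except ValueError as exc:
        # Raised both for non-numeric text and for numbers outside 0..6.
        raise ValueError(f"not a BI-RADS assessment category: {raw!r}") from exc


category = parse_assessment("4")
```

Validating at the point of ingestion keeps free-text variants and out-of-range values out of downstream registries and reports.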

When gaps in coded terms exist, standards developers and professional societies work together to add the missing concepts to the appropriate standard. Strain imaging echocardiography is a recent innovation that uses speckle-tracking echocardiography (STE) and deep learning to segment the myocardium and estimate its motion, from which myocardial strain is computed throughout the cardiac cycle. This new modality yields comprehensive, quantitative measures of myocardial function. Global and regional strain indices aid clinical decisions. Leveraging the joint effort of the American Society of Echocardiography (ASE) and the European Association of Cardiovascular Imaging (EACVI) to standardize strain imaging measures, DICOM WG12 Ultrasound and WG1 Cardiovascular are now defining code sets for AI models that need them. When this work is complete, vendors of AI models should specify DICOM conformance, including code sets, in their DICOM Conformance Statement. Doing so helps buyers assess and choose products and assists the development of compatible products.
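For readers unfamiliar with the quantity being coded: strain is conventionally expressed as the change in segment length relative to a reference length, as a percentage, so that shortening yields negative values. The sketch below assumes the per-frame segment lengths have already been extracted by speckle tracking and that the first frame is the reference. The function names and the sample lengths are hypothetical, and taking the minimum as the peak is a simplification.

```python
from typing import List, Sequence


def strain_curve(lengths_mm: Sequence[float]) -> List[float]:
    """Lagrangian strain in percent, relative to the first (reference) frame."""
    if not lengths_mm or lengths_mm[0] <= 0:
        raise ValueError("need a positive reference length in the first frame")
    reference = lengths_mm[0]
    return [100.0 * (length - reference) / reference for length in lengths_mm]


def peak_shortening(strain_percent: Sequence[float]) -> float:
    """Most negative strain value, i.e. the greatest shortening over the cycle."""
    return min(strain_percent)


# Illustrative segment lengths over one cardiac cycle, not patient data.
lengths = [100.0, 92.0, 80.0, 88.0, 100.0]
curve = strain_curve(lengths)
peak = peak_shortening(curve)
```

A standardized code set is what lets two vendors report such a value under the same concept name and units, so that it can be compared across systems.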

Related standards effort is ongoing:

Implementation experience and tools have been published:

AI acceptance will stimulate the encoding of automated image findings into standard, structured, coded reports for both machine and human consumers. As AI results grow in quantity and prominence in clinical workflow, motivation for results interoperability will increase, with an emphasis on semantic machine consumption rather than merely graphic and text display. Coded AI results, in conjunction with their underlying images, generate a new wealth of data for developing and refining AI models. DICOM solutions and data migrations will place higher priority on DICOM Structured Reports. The motivation to preserve AI results is likely to reach parity with that for DICOM images.
